A 'Perceptive Robot' Earns Draper Spot as KUKA Innovation Award Finalist

CAMBRIDGE, MA—Despite their incredible precision and reliability, robots are unable to perform simple tasks when confronted with unstructured environments. While that hasn’t slowed down their popularity—some 233,000 robots are at work in the U.S. every day—it does limit them to tightly controlled environments that have no variation, like assembly lines and factories.

“Manipulating objects in the real world is so hard for robots because they are easily confused by the complexity of everyday environments,” said David M.S. Johnson, an Advanced Technology Program Manager at Draper. “To achieve robust, autonomous operation in unstructured environments, robots must be able to identify relevant objects and features in their surroundings, recognize the context of the situation and then plan their motions and interactions accordingly. They need to be more like humans and be able to adapt to our environments.”

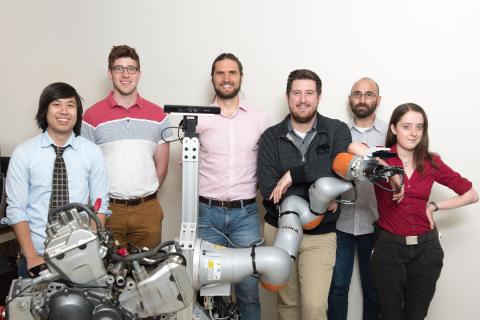

Johnson and fellow researchers from Draper, Harvard and MIT addressed this challenge by outfitting a robot with a new way to quickly and accurately perceive its environment and then make decisions and take action. Their robot uses a convolutional neural network—a kind of machine-learning technology—that enables it to estimate the size, shape and distance of objects in cluttered environments.

“Our robot is designed to learn,” said Johnson. “Any time new data for a task is available, we can re-train the system and provide a new capability. Because we use off-the-shelf hardware, our software is simple to deploy and can reduce costs for traditional installations by requiring fewer modifications to the environment to accommodate the robot.”

The team is a collaboration among Draper, Prof. Russ Tedrake’s Robot Locomotion Group at MIT and Prof. Scott Kuindersma’s Agile Robotics Lab at the Harvard School of Engineering and Applied Sciences. A paper on their robotic system earned the researchers a place among the finalists for the KUKA Innovation Award. In April 2018 the group will visit KUKA in Germany to demonstrate their robot, which uses a KUKA flexFellow and a Roboception camera, and show what they are calling “a dexterous manipulation platform which can assemble and disassemble mechanical parts like a human technician.” For the demonstration, the robot will change the oil on a motorcycle.

Johnson added that, unlike many of the robots in use today, this system can be used in a wide range of applications and industries. “With this system, small and medium-sized enterprises currently underserved by automation solutions could have fewer barriers to automating their activities, require less costly installation and service contracts from third-party providers and more rapidly adopt automation technology. Because our robots can operate in unstructured environments, we are excited to explore novel applications for them in warehousing, maintenance, construction, assembly and retail.”

Draper’s entry for the KUKA Innovation Award follows years of research, prototype development, licensing and delivery of robotics and autonomy systems. Draper’s robotics portfolio includes artificial intelligence software that can predict a human user’s needs and tailor the robot’s interface to create a personalized experience. Draper has also developed robots to service satellites in Earth orbit and equipped fast, lightweight aerial vehicles to navigate autonomously in GPS-denied environments such as dense forests and warehouses.

Released August 30, 2017